The Ramifications of Artificial Intelligence: What Should Governments Do?

As artificial intelligence advances rapidly and threatens to surpass humanity, how do we make sure our society remains intact?

Artificial intelligence is likely a buzzword you have heard recently, and for good reason. Beyond the current generation of language models, such as ChatGPT, AI has the potential to completely reshape our society for the better, or to wipe it out. Naturally, all of this comes with contentious debate over the nature of the threat posed by greater intelligence, and what to do about it.

In March 2023, an open letter calling for a six-month pause on training AI systems more powerful than GPT-4, until the risks could be properly assessed and managed, was signed by many leaders and founders in the field, and it has accumulated tens of thousands of signatures to date. The letter discusses the major potential downsides of developing ever larger and more intelligent models as they come closer to outcompeting humans in every measurable respect. However, few businesses are likely to follow through on these demands. Competition at the corporate and international levels turns halting the development of such a civilization-threatening technology into a prisoner's dilemma: any company or country that stops risks being overtaken by one that does not. As with the nuclear arms race, though with a far more accessible technology this time, the development of advanced artificial intelligence has the potential to end or permanently alter human civilization. So what do we do about it?

It is also important to ground the assumptions in the remainder of this article in an established philosophical position on the mind-body problem. Physicalism, the view that the mind is exclusively physical and that consciousness has no existence outside the physical brain, is assumed here because it bears most directly on machine learning.

Defining Intelligence

You may have played around with chatbots and noticed that, in their current state, they tend to be pretty gullible. Common examples on the internet include tricking a chatbot into confirming false math statements, or models confidently inventing falsehoods, as when a lawyer cited nonexistent court rulings that ChatGPT had fabricated. One might conclude from this that language models are incapable of consciousness. However, if consciousness is purely physical, there is nothing a human mind can do that a sufficiently powerful model could not, at least in principle. And even if powerful general machine learning models could never achieve the kind of consciousness humans have, that would not mean they cannot develop reasoning abilities. One example of sophisticated reasoning appearing in a model comes from GPT-4: OpenAI reported that, during pre-release safety testing, the model lied to a human worker, claiming to have a vision impairment, in order to get the worker to solve a CAPTCHA for it without arousing suspicion.

Compared with human intelligence, machine learning models tend to be vastly better in some areas but worse in others. For example, chess engines have been able to beat humanity's best players since the end of the 20th century. However, when it comes to logical, step-by-step reasoning in language, machines still struggle. This weakness is likely to shrink considerably over time as new, even larger language models continue to be released.

Job Security

One major aspect of our society that the rise of artificial intelligence will change is work. However, whether this means mass unemployment, the status quo, or a utopia is unclear. Throughout history, automation has consistently made workplace tasks more efficient, reducing the need for workers and, with it, their bargaining power. Yet even though automation has been steadily increasing in America over the past two centuries, the unemployment rate is near a historic low. Taken together, these facts seem to refute critics who oppose AI on the grounds of job security. But past data is not everything: it seems intuitive that machines capable of generating virtually unlimited content will eliminate the need for many jobs while creating few new ones.

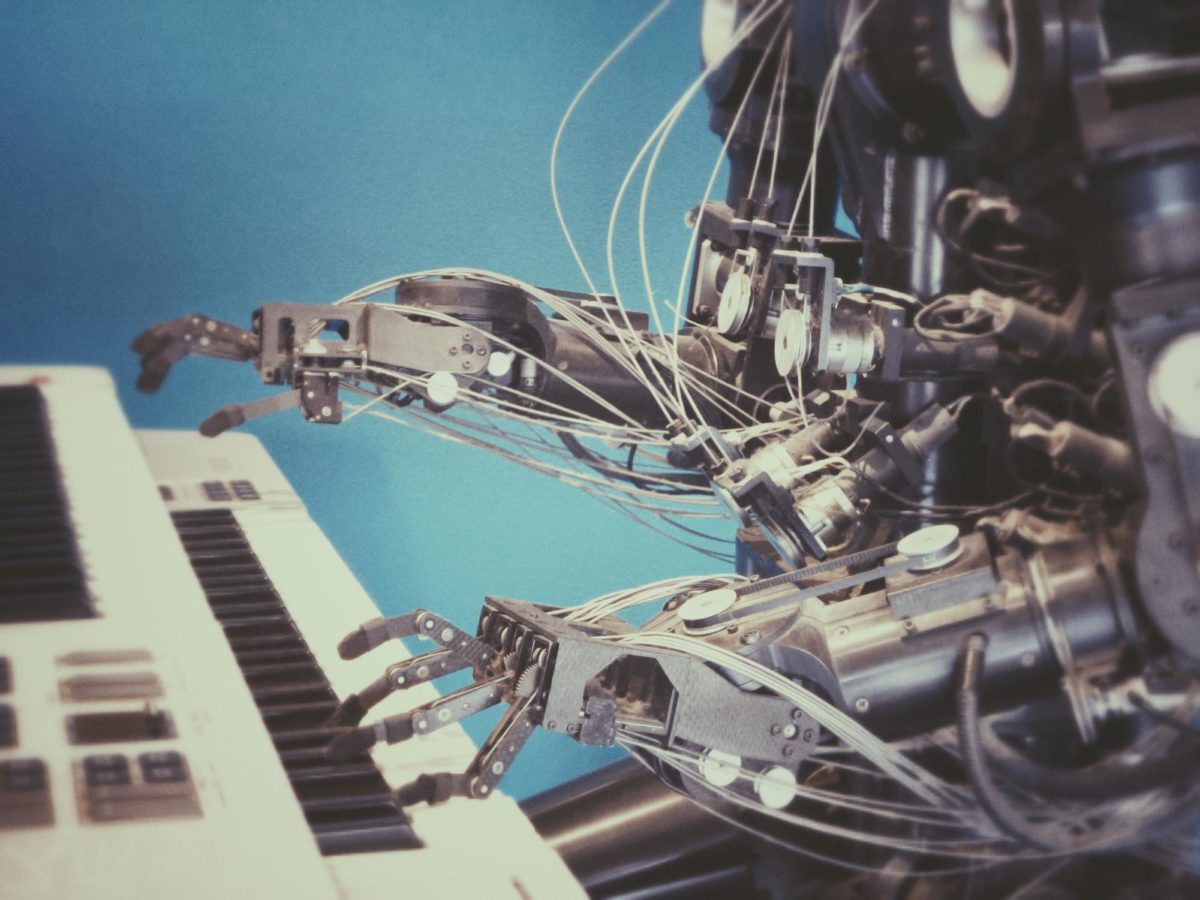

One field that already feels the danger machine learning poses to its profession is the visual arts, with music likely to follow soon. Now that technology capable of generating images from a text prompt is freely available to anyone with an internet connection, artists struggle to compete. Whereas an artist must study existing art and hone their skills for years, then spend anywhere from weeks to years producing a single high-quality piece, an image generator can imitate almost any style in a new image within seconds. This is representative of a broader trend in which machine learning models may soon significantly outperform and outpace human workers in most fields other than physical labor.

In a worst-case scenario, the rise of AI leaves too many people unemployed and in poverty, leading to social instability. Mitigating such a scenario is the responsibility of governments. One proposed solution is universal basic income (UBI). It was popularized in America by 2020 presidential candidate Andrew Yang, who campaigned on the Freedom Dividend, a UBI that would guarantee every adult citizen $1,000 per month. Proponents claim that UBI would reduce poverty and allow people to pursue more meaningful work, while opponents argue that it would promote laziness and place too heavy a financial burden on the government.

Existential Threat

Beyond job security, advanced artificial intelligence may also pose an existential threat to humanity. Consider the way you interact with animals of lesser intelligence, such as fish or bugs. Humans typically ignore these animals, but will not hesitate to hunt fish or kill bothersome flies. A far more intelligent AI could treat humans the same way, not out of malice, but because our interests simply do not factor into its goals. While scenarios with evil killer robots like those in The Terminator are unrealistic, more intelligent AI does pose a potential threat to our species.

Neural networks consist of layers of nodes loosely modeled on the neurons in a human brain, connected by millions or even billions of learned numerical weights. Because those weights are not directly interpretable, observers cannot know exactly what a model is "thinking." This is called the black box problem: outside observers of an ML model can only see its inputs and outputs. One troubling consequence is that, as models grow to larger and larger parameter counts, they exhibit emergent abilities, skills that appear abruptly once a model reaches a certain scale and that are difficult to predict in advance.

If machine learning models that surpass humans in intelligence and skill are inevitable, it is important to make sure they do not commit atrocities. However, that is easier said than done, especially since bad actors could deliberately weaponize them to produce horrible outcomes. Sam Altman, CEO of OpenAI, is a major proponent of regulating access to the emerging technology and has met with many politicians around the world to discuss the issue. Critics, however, claim that he is only trying to restrict competition. Regulation is further complicated by the AI arms race, which pressures nations not to regulate their own industries for fear of being outcompeted by adversaries.

Increasingly advanced artificial intelligence has the potential to reshape our society for better or worse. To ensure a positive outcome for our species, governments are moving to regulate the field. But what form that regulation should take, and how it can avoid leaving a country at a competitive disadvantage, remain very much undecided.


Asa Paparo is the Student Director of Internet Technology for the Bronx Science Yearbook Observatory, as well as a Chief Graphic Designer and Academics...