The Lethal Legacy of Uranium Mining in America

In the twentieth century, uranium mining prospects were many and safety regulations were few.

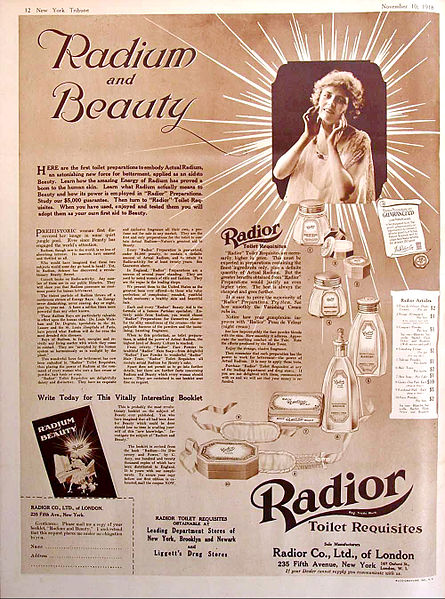

Radior cosmetics, Public domain, via Wikimedia Commons

Radium, one of the deadliest fads of the twentieth century, could once be found in almost any product.

At the dinner table of a 1950s uranium prospector, the Atomic Energy Commission (AEC) would have been a household name. With fixed prices for ore and bonuses for new discoveries, the AEC offered a new way to make money quickly: uranium mining. Over the decade, thousands of prospectors flocked to Colorado in pursuit of those rewards.

Created during Truman’s presidency (1945-1953), the AEC could be read as an overzealous example of laissez-faire economics: from the government’s standpoint, more oversight of the mines would only have meant more funds to allocate. The AEC commercialized atomic energy and practically put the production of the atomic bomb in the hands of its employees, while also making uranium cheaper to acquire. Its primary concern lay in securing the future of uranium production. Publicizing the uranium-specific hazards of mining, while beneficial to workers, would therefore have been counterproductive to its goals.

For decades, radiation was a poorly understood phenomenon with a reputation for novelty rather than danger. In 1896, physicist Henri Becquerel discovered naturally occurring radioactivity; the discovery grew out of a failed experiment and an incorrect hypothesis. “Before people started to fear radioactivity,” said Timothy J. Jorgensen, professor of radiation medicine at Georgetown University, “all they seemed to know about it was that it contained energy.”

Research into radioactivity continued, but rarely with a focus on its dangers. Given time and tons of pitchblende, a common uranium ore, Marie Curie discovered radium, now considered one of the most lethal ‘daughter’ products created by the radioactive decay of uranium and, gram for gram, more than a million times more radioactive than uranium itself. As radium decays, it releases ionizing radiation, which damages DNA, killing cells or impairing their ability to reproduce.
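To put radium’s radioactivity in perspective, the short Python sketch below compares the specific activity (decays per second in one gram) of radium-226 with that of uranium-238, using the standard relation A = (ln 2 / T½) × (N_A / M). The half-lives and molar masses are approximate textbook values assumed for illustration, and the function name `specific_activity` is hypothetical. The comparison is against pure uranium-238; natural uranium also contains uranium-234 and uranium-235, which roughly double its activity and bring the ratio down to about 1.4 million.

```python
import math

AVOGADRO = 6.02214076e23               # atoms per mole
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year in seconds

def specific_activity(half_life_years: float, molar_mass_g: float) -> float:
    """Hypothetical helper: decays per second (Bq) in one gram of a pure isotope.

    A = decay constant * atoms per gram = (ln 2 / T_half) * (N_A / M)
    """
    decay_constant = math.log(2) / (half_life_years * SECONDS_PER_YEAR)  # per second
    atoms_per_gram = AVOGADRO / molar_mass_g
    return decay_constant * atoms_per_gram

# Assumed approximate values: half-life in years, molar mass in g/mol.
radium_226 = specific_activity(1600, 226.03)
uranium_238 = specific_activity(4.468e9, 238.05)

ratio = radium_226 / uranium_238
print(f"Ra-226: {radium_226:.2e} Bq per gram")
print(f"U-238:  {uranium_238:.2e} Bq per gram")
print(f"Per gram, Ra-226 is about {ratio:.1e} times more radioactive than U-238")
```

The gap comes almost entirely from the half-lives: radium-226 decays in about 1,600 years, while uranium-238 takes roughly 4.5 billion, so a gram of radium undergoes millions of times more decays every second.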

But marketers reached radium before caution did. Curie quickly recognized the danger in the widespread use of radioactive elements, even though she did not understand most of their effects. The Curies, who made their discovery in 1898, had neither the time nor the money to patent it, which ultimately allowed radium to spread into commonplace items. For Marie Curie, decades of carrying tubes of radium in her pockets and uncontrolled exposure to the resulting ionizing radiation contributed to her death from aplastic anemia at the age of 66. In 1927, scientist Hermann Joseph Muller linked radiation exposure to genetic mutations and an increased risk of cancer.

At the start of the twentieth century, radioactivity and its novelty had the consumer market in a death grip. Radium could be found in nearly every product, and where it wasn’t, advertising falsely claimed its presence to encourage sales. Radium dissolved in water was sold as the medicine Radithor; radium toothpaste and suppositories flew off the shelves as soon as they hit them. It took several years – during which conditions such as bone necrosis and ‘Radithor jaw,’ the detachment of the entire jaw caused by ingesting radium, became commonplace – for the legal system to catch up. The 1938 Food, Drug, and Cosmetic Act followed, but it went only as far as banning false advertising.

Altogether, safety precautions regarding uranium were practically nonexistent, and the Southwest’s uranium boom continued for decades. Spurred on by the looming threat of the Cold War, the United States not only excavated uranium from its own heartland but also imported it from mines in the Congo. In the Navajo Nation alone, almost four million tons of uranium were mined from 1944 to 1989.

The Southwest’s uranium mining belt, the strip of land mined for its wealth of uranium, also overlapped with the largest Native American reservation, the Navajo Nation. At the time, the Navajo language had no word for radiation or its consequences. Workplace regulations were few and far between; by 1986, as many as 5,000 Navajo people had worked the uranium mines on their land, unknowingly exposing themselves to lung cancer and renal failure. Access to clean water was limited, and uranium mill spills such as the 1979 Church Rock spill further contaminated groundwater and the local Puerco River. Today, even in the least contaminated areas of the reservation, uranium levels in water are still three times the EPA’s safe limit of 30 micrograms per liter.

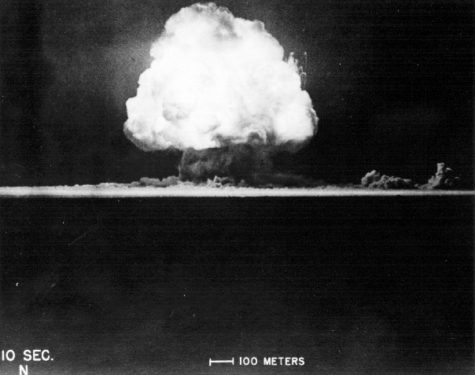

Heavy elements like uranium lay the foundation for a nuclear explosion. Uranium belongs to a category of heavy elements that can undergo nuclear fission, occasionally even spontaneously. Fission, the process in which a heavy atomic nucleus splits into two lighter nuclei and releases several free neutrons, produces immense amounts of heat and energy. In a nuclear reactor, uranium-238, the most common naturally occurring isotope, absorbed neutrons released by fission and became uranium-239, which decayed within days, by way of neptunium-239, into plutonium-239. Plutonium, which barely occurs in nature and undergoes fission readily, was well suited to the core of Trinity, the first nuclear bomb. Approximately 6 kilograms of plutonium were used in its core. Surrounding explosives compressed the plutonium sphere enough for it to reach critical mass, allowing it to sustain a nuclear chain reaction. On July 16, 1945, Trinity detonated over the Jornada del Muerto desert in New Mexico.
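The reactor step that turns uranium into plutonium can be summarized in standard nuclear notation. This is a sketch of the textbook breeding chain: uranium-238 captures a neutron, then two beta decays each raise the atomic number by one while the mass number stays at 239. The half-lives shown are approximate textbook values, not figures from the original reporting.

$$
{}^{238}_{92}\mathrm{U} + {}^{1}_{0}n \;\longrightarrow\; {}^{239}_{92}\mathrm{U} \;\xrightarrow{\beta^-,\ \approx 23.5\ \text{min}}\; {}^{239}_{93}\mathrm{Np} \;\xrightarrow{\beta^-,\ \approx 2.4\ \text{days}}\; {}^{239}_{94}\mathrm{Pu}
$$

Because both intermediate isotopes are short-lived, almost all of the captured uranium ends up as plutonium-239 within a couple of weeks, which is what made reactor-bred plutonium practical for the Trinity core.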

Trinity’s success, disguised as an accidental detonation of high explosives, was kept secret until the bombing of Hiroshima three weeks later. After that, a craze for uranium set in, aptly named ‘uranium fever.’ Governments, chief among them the United States, rushed to procure the metal, which led the AEC to open uranium mining to free enterprise. For thousands of prospectors, the promise of wealth was reason enough to flock to Colorado and the surrounding Four Corners region in search of uranium.

In the 1950s, concern that uranium miners were developing lung cancer pushed the U.S. Public Health Service, as well as the AEC, to begin the first of several mortality studies. The results showed that the miners were dying of certain diseases, such as tuberculosis and emphysema, at abnormally high rates. Yet even as the studies began to link these deaths to years of unregulated uranium mining, official concern remained minimal, and miners were not told of the risks they were taking.

“These are not invisible rays, they are materials. They get into our water, our food, and the air we breathe,” said Gordon Edwards, co-founder of the Canadian Coalition for Nuclear Responsibility and nuclear consultant. “We do not want to permanently increase our radiation levels on this planet. We have enough problems already.”

Marina Tiligadas is a Staff Reporter for 'The Science Survey' where she seeks to inform and spread awareness of current events. Outside of school, Marina...