If, If not, Elif, Else, and While. Strings, Arrays, and Booleans. Python, Java, and JavaScript. Together they are part of the study of computer science, a field at the heart of the digital age. Today, computer science is a hot topic, with more than 600,000 people majoring in it in the United States as of 2023, a 40% increase compared to the previous year! But why? Why is everybody trying to major in this field? How did computer science become what it is today? To find out, we first have to understand the history of computers, the machines on which the study of computer science is based, from the start.

Innovation: The start of a new era

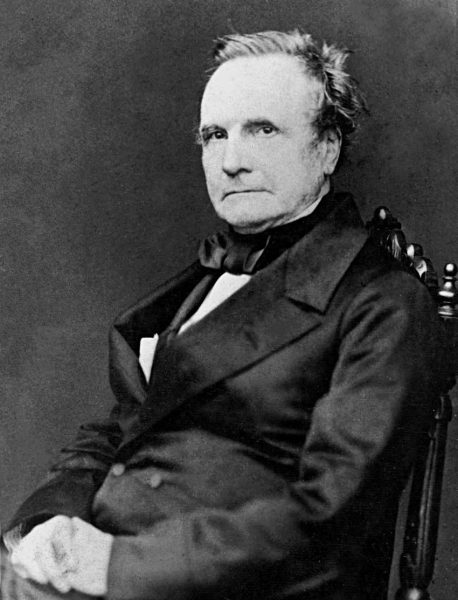

A problem that many mathematicians faced in the 19th century was the mass of calculation involved in their work. Instruments such as the abacus and the slide rule were no longer adequate, so new machines were needed to make rapid and accurate calculations. In response, mathematicians began to develop a series of machines now known as the early calculators. Among these, the most famous are the engines designed by Charles Babbage. His Difference Engine was designed to tabulate logarithms and trigonometric functions by evaluating finite differences to create approximating polynomials, which would make such calculations much faster. His later design, the Analytical Engine, was intended as a general-purpose machine, although it was never completed. One fun fact is that Ada Lovelace is often described as the first computer programmer for her published method of calculating Bernoulli numbers with the Analytical Engine.
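To make the method of finite differences more concrete, here is a minimal Python sketch of how a table of polynomial values can be built using nothing but repeated addition. The function name `tabulate_by_differences` and the example polynomial x² + x + 41 are illustrative assumptions, not a description of how any historical machine was actually wired.

```python
def tabulate_by_differences(initial_differences, steps):
    """Tabulate a polynomial using only additions.

    initial_differences holds [f(0), Δf(0), Δ²f(0), ...], where the last
    entry is the constant highest-order difference. (Hypothetical helper
    written for illustration.)
    """
    diffs = list(initial_differences)
    values = []
    for _ in range(steps):
        values.append(diffs[0])
        # Each column absorbs the column to its right, lowest order first,
        # so every update uses the neighbor's value from the current step.
        for i in range(len(diffs) - 1):
            diffs[i] += diffs[i + 1]
    return values


# Example polynomial (an assumption): f(x) = x^2 + x + 41
# f(0) = 41, first difference f(1) - f(0) = 2, constant second difference = 2
table = tabulate_by_differences([41, 2, 2], 6)
print(table)
```

Because a polynomial of degree n has a constant n-th difference, the whole table follows from a handful of starting numbers, which is what made the approach attractive for a mechanical calculator.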

Later on, key figures like Sir William Thomson and Vannevar Bush designed the tide-predicting machine and the differential analyzer, respectively; the latter helped mathematicians solve differential equations. These inventions proved to be a big success in the mathematical field. They were analog computers, machines that manipulate electrical, mechanical, or hydraulic signals, and they were faster and, in a way, easier to use than their predecessors. By 1929, AC network analyzers, a kind of analog computer, had been developed to handle problems too large for analytic methods or hand computation. These machines were able to solve problems in nuclear physics and in the design of structures. The use of analog computers reached its peak during World War II, when members of the armed forces used them to help calculate the trajectory of bombs and the position of the enemy. Notable examples are gun directors and bombsights: the former helped calculate the position of a moving target, and the latter calculated the bombing position for aircraft. As time went on, the use of electricity helped analog computers develop further into electrical analog computers. By manipulating voltages in an electrical circuit, these machines could carry out a wide variety of mathematical operations, including summation, inversion, integration, and differentiation.

The computers that we use today grew out of the move away from electrical analog machines. Engineers like John Vincent Atanasoff are credited with developing the first electronic digital computer. In his prototype, he used capacitors to store data in binary form and electronic logic circuits to perform addition and subtraction. His goal was to avoid the flaws of analog computers, so his machine computed by direct logical action rather than enumeration, which gave it greater accuracy. There we have it: the transition from analog computers to electronic digital computers.

What about Computer Science?

Okay, so we have a computer now. But weren't we talking about computer science rather than computers? This is where the story really begins. The development of computer science occurred alongside the invention of computers. While Charles Babbage and other engineers were designing the first computing machines, the fundamental theories behind computer science were also taking shape. A notable example is Boolean algebra, introduced by George Boole in 1847. Boolean algebra works with only two values, 0 and 1, which is why so many movies depict hackers surrounded by streams of 0s and 1s. It has three basic operations: conjunction, disjunction, and negation, written with the operators AND (∧), OR (∨), and NOT (¬). Together with the monotone and nonmonotone laws that govern these operators, they give us the most basic building blocks of code. In these rules, x and y each take the value 0 or 1, where 0 stands for false and 1 for true. For example, x AND y equals 1 only when both x and y are 1, while x OR y equals 1 when at least one of them is 1, so by feeding a series of 0s and 1s into a machine we can tell it whether x is true, y is true, or both. This is similar to the ‘if, elif, else’ statements in coding today.
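As a rough illustration of these three operations, the following Python sketch builds the full truth table over 0 and 1 and then uses the results in an if/elif/else chain. The function names `AND`, `OR`, `NOT`, `truth_table`, and `describe` are chosen here for illustration and do not come from Boole's work.

```python
from itertools import product


def AND(x, y):
    """Conjunction: 1 only when both inputs are 1."""
    return x & y


def OR(x, y):
    """Disjunction: 1 when at least one input is 1."""
    return x | y


def NOT(x):
    """Negation: flips 0 to 1 and 1 to 0."""
    return 1 - x


def truth_table():
    """Return rows of (x, y, x AND y, x OR y, NOT x) for every input pair."""
    return [(x, y, AND(x, y), OR(x, y), NOT(x))
            for x, y in product((0, 1), repeat=2)]


def describe(x, y):
    """Use the Boolean results to pick a branch, as code does today."""
    if AND(x, y):
        return "both true"
    elif OR(x, y):
        return "at least one true"
    else:
        return "neither true"


for row in truth_table():
    print(*row)
print(describe(1, 0))
```

The same three operations are enough to express any decision a program makes, which is why they sit underneath every conditional statement.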

Later on, Alan Turing’s idea of the Turing machine was another crucial step toward computer science as we see it today. Turing described an abstract machine with a control unit that reads and writes symbols on a tape by following a fixed table of rules, an idea that anticipates the command center of a modern computer, the CPU. This is very important because it means we can give the computer a series of simple instructions telling it what to do. It also suggests that results can be written to storage as soon as they are calculated, by the machine itself, instead of someone having to do it manually. The theories developed by Alan Turing, John von Neumann, and many others allowed computer science to develop alongside computers, shaping it into what we see today.

The development of computer science

Building on this theory, the first high-level programming language, called Plankalkül, was developed in the 1940s by Konrad Zuse. However, it worked very differently from the programming languages that we use today. Cryptography, shaped by World War II, later became part of software written in languages like C and C-. Other theories, such as computational complexity theory, which builds on Turing’s machine, were also taken up over time as later languages were developed. As time goes on, we are moving further away from the language that Zuse invented, and the practical limits on what computers can and cannot do have shifted, setting different boundaries and rules for code.

Timeline

In the late 1950s and 1960s, new kinds of programming languages were developed: COBOL (Common Business-Oriented Language), BASIC (Beginner's All-purpose Symbolic Instruction Code), and ALGOL (Algorithmic Language). They made programming more accessible to beginners, as they were easier to understand than Plankalkül.

The 1970s introduced languages that would become cornerstones of modern programming, including C and Pascal. C, developed by Dennis Ritchie at Bell Labs in 1972, was designed for system programming and writing operating systems. UNIX, the Bell Labs operating system that was rewritten in C, became the base for many modern operating systems. More recent languages like C-, C++, Java, and C# all draw on C. Pascal was developed around 1970 by Niklaus Wirth of Switzerland in order to teach structured programming, which emphasizes the orderly use of conditional and loop control structures without GOTO statements. Pascal combines features of ALGOL, COBOL, and FORTRAN. These languages facilitated the development of robust software and operating systems.

The 1980s marked the rise of object-oriented programming (OOP), popularized by Smalltalk, which had been created at Xerox PARC in the 1970s. Smalltalk introduced many programmers to concepts such as objects, classes, and inheritance, which allow for reusable and modular code, while other paradigms, such as functional programming, developed alongside it. Smalltalk is also one of the first languages whose environment used the model–view–controller (MVC) pattern, which allows multiple consistent views of the same underlying data. MVC was later widely adopted in frameworks for languages like C++ and Java.
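The ideas of classes, inheritance, and multiple consistent views of the same data can be sketched in a few lines of Python. This is only a toy illustration under assumed names (`Counter`, `View`, `DecimalView`, `TallyView`, `Controller`); it is not how Smalltalk itself implements MVC.

```python
class Counter:
    """Model: holds the data and notifies attached views when it changes."""

    def __init__(self):
        self.value = 0
        self._views = []

    def attach(self, view):
        self._views.append(view)

    def increment(self):
        self.value += 1
        for view in self._views:
            view.refresh(self)


class View:
    """Base class: subclasses inherit refresh() and override render()."""

    def __init__(self):
        self.rendered = ""

    def render(self, model):
        raise NotImplementedError

    def refresh(self, model):
        self.rendered = self.render(model)


class DecimalView(View):
    def render(self, model):
        return str(model.value)


class TallyView(View):
    def render(self, model):
        return "|" * model.value


class Controller:
    """Controller: turns user actions into changes on the model."""

    def __init__(self, model):
        self.model = model

    def handle_click(self):
        self.model.increment()


model = Counter()
decimal_view, tally_view = DecimalView(), TallyView()
model.attach(decimal_view)
model.attach(tally_view)

controller = Controller(model)
for _ in range(3):
    controller.handle_click()

print(decimal_view.rendered, tally_view.rendered)
```

Both views stay in step because they read from the same model, and each new kind of view only has to supply its own `render` method while reusing everything else through inheritance.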

With the rise of the internet in the 1990s, scripting languages like Perl and Python rose to prominence, catering to web development and automation tasks. These languages offered simplicity and flexibility, enabling rapid development and deployment of web applications. Java, introduced by Sun Microsystems, brought platform independence with its “write once, run anywhere” capability, becoming a staple for web and enterprise applications.

The early 2000s saw the rise of languages such as Ruby and PHP, which are still known for web development today. Ruby gained support because it allows multiple programming paradigms, including procedural, object-oriented, and functional programming. Ruby on Rails, a web application framework, changed web development by providing a standardized and organized way to build web apps, making it easier for developers to write clean, maintainable, and scalable code. Meanwhile, PHP became the backbone of many dynamic websites.

Today, programming languages like Java, C++, Python, and JavaScript are widely used across various domains. Java remains a preferred choice for enterprise applications, C++ for system and application software, Python for data science and machine learning, and JavaScript for front-end and back-end web development.

Why Computer Science?

After learning about the history of computer science, one question still needs to be answered. Why computer science? Although modern-day programming is far simpler than its early counterparts, it may still be hard for some to understand. There are no definite answers, but one reason might be that it is a transferable skill. Today, we can see the presence of computer science in basically every single field, from data analysis in research to web design for fashion. Another reason for the continued popularity of computer science may be that many high-paying jobs, such as data scientist and software developer, need people who have skills in this field. The most important reason may be that it is very convenient. You may wonder what I mean by ‘convenient,’ but the answer is simple: you can work at home or anywhere you want. Since much of the work comes down to writing and editing code, all you need is a laptop. This sets it apart from other jobs where you have to sit and work in an office, interacting with your peers. Computer science is a field that is still growing, and it will probably not stop in the near future. For this reason, it is probably worth learning some computer science skills when you can, as you never know when you will need them.
