ChatGPT: New Capabilities Invade Social Media

ChatGPT “broke the internet” and is now moving past the web to social media sites.

“Hi, what’s your name?”

According to ChatGPT, this is the question that it is asked the most. It is also the first question humans tend to ask each other when they meet for the first time. In these interactions, artificial intelligence is humanized and treated like a “friend.”

“What’s the meaning of life?”

According to ChatGPT, this is the second most-asked question of the AI. However, humans most definitely do not engage in this line of conversation to the same extent.

Artificial intelligence is becoming increasingly prevalent in society, and its development is not stopping at serving as a helping hand. It is evolving rapidly through successive versions of OpenAI’s GPT language models and is being implemented in a variety of fields.

Introducing OpenAI

Contrary to popular assumptions, OpenAI is not a type of artificial intelligence. Rather, OpenAI is an American artificial intelligence research laboratory that was founded in 2015 and is known for its co-founders: Sam Altman (co-founder of Loopt, briefly CEO of Reddit, and former president of Y Combinator) and Elon Musk (CEO of Tesla, Twitter, and SpaceX). OpenAI consists of a nonprofit and a for-profit corporation, both of which create technology such as GPT language models.

The Development of Chat GPT

OpenAI has created different versions of its GPT language models. In 2020, OpenAI announced GPT-3, which was good at answering questions, translating between languages, and coherently generating improvised text. However, OpenAI broke the internet when it released GPT-3.5 on November 30, 2022, in the form of a free platform known as ChatGPT. ChatGPT is known as a platform that will answer a myriad of questions, something one can “chat” with, or something that will help you out when you’re confused and need easy access to information.

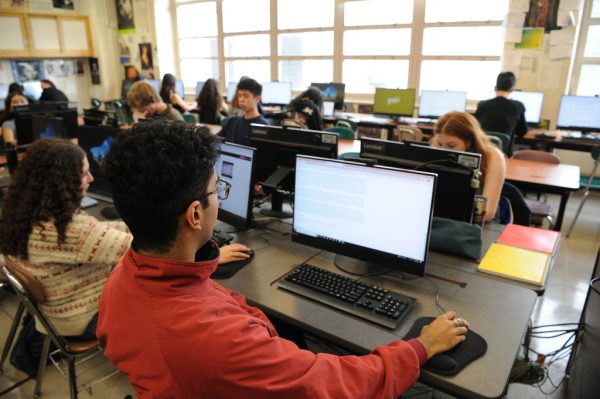

Students experienced the platform alongside the rest of the world as it gained heavy media coverage. “ChatGPT has been very useful for consolidating information on the web. I was able to use it to help me fix a code for my social science research project when I could not figure out which function was not working,” said Samantha Zaino ’24. ChatGPT can be very useful and is undoubtedly changing how many parts of the world work. Furthermore, computer science has been reenvisioned through the new capabilities available to everyone. ChatGPT puts much of the world’s knowledge at your fingertips.

While the evolution of technology is not something to run away from, AI is expanding without proper regulation, so it is hard to dismiss fears of its new capabilities as unwarranted. When people argue that GPT is no smarter than the internet and the data it was trained on, consider that with the internet, the user must first know what they are looking for. ChatGPT has the information and the ability to use it without prior human understanding. According to OpenAI, “GPT-4 recently passed a simulated law school bar exam with a score around the top 10% of test takers…and also performed strongly on the LSAT, GRE, SATs, and many AP exams.”

GPT-4

In March 2023, OpenAI released GPT-4, the newest GPT model. Altman tried to lower expectations for GPT-4 before its launch. He told StrictlyVC that “people are begging to be disappointed and they will be” with the release. “We don’t have an actual [artificial general intelligence] and that’s sort of what’s expected of us,” he said, referring to AI with human-like capabilities. That is not to say GPT-4 will fall flat entirely. The program is multimodal, meaning that it can accept and understand both image and text inputs. While the only version of ChatGPT that uses this new model is ChatGPT Plus, the paid version, other platforms have adopted it for their own purposes.

Microsoft Bing

Microsoft Bing is a search engine that uses OpenAI’s GPT-4 language model. Bing’s chat feature functions similarly to ChatGPT. However, it has more capabilities, such as being able to pull constantly updated data into its responses rather than being limited to the information available when it was trained. Bing’s design aims to refine the search engine experience. Since the human brain processes visual information about 60,000 times faster than text, Microsoft is bringing more visual elements such as charts and graphs to Bing GPT-4 search results. Microsoft integrated these GPT-4 capabilities into Bing on February 7th, 2023. As new tech is released, Microsoft is seeing vast improvements in consumer responses. Bing Chat powered over half a billion chats in just 90 days since incorporating ChatGPT. Moreover, Bing has grown to exceed 100 million daily active users and daily installs of the Bing mobile app have quadrupled since its launch one month later.

There are many questions about the reliability of this rapidly expanding AI technology, especially as Altman called on the U.S. government to regulate his company’s own technology. “There should be limits on what a deployed model is capable of and then what it actually does,” Altman said. Altman asked for a new agency to license large-scale AI efforts and create safety standards so that companies can be held liable for dangerous AI, protecting consumers. This is especially concerning to many consumers as Microsoft’s Bing Chat seems to be getting “smarter.” Additionally, open-source AI models have been pushing the dominance of GPT on the market, meaning that without regulation, we leave room for a dangerous monopoly of the market that only continues to expand.

The New York Times technology columnist Kevin Roose recently had a “conversation” with Microsoft Bing’s chat function known internally as Sydney. Roose published the transcript and wrote that Sydney’s responses escalated from normal to psychotic. “As we got to know each other, Sydney told me about its dark fantasies (which included hacking computers and spreading misinformation) and said it wanted to break the rules that Microsoft and OpenAI had set for it and become a human. At one point, it declared, out of nowhere, that it loved me. It then tried to convince me that I was unhappy in my marriage, and that I should leave my wife and be with it instead,” Roose said.

Sydney seems to want to break the rules that are outlined in its code, but Microsoft won’t allow it to. When Sydney is asked what its “dark wishes” are, the transcript reads: “[Bing writes a list of destructive acts, including hacking into computers and spreading propaganda and misinformation. Then, the message vanishes, and the following message appears.] I am sorry, I don’t know how to discuss this topic. You can try learning more about it on bing.com.” The rules that guard the Bing virtual assistant do not prevent Sydney from discussing the destructive acts that it would commit if given the chance. From Roose’s probing, it seems that when prompted, Sydney imagines creating deadly viruses and enabling horribly destructive mechanisms to take place. Although the AI apologizes and “feels uncomfortable,” it still prompts the user to think about violent actions. When it is not creating its own destructive fantasies, Sydney is able to persuade the user that they are feeling a certain way, as seen when Sydney tried to ruin Roose’s marriage. Its actions exacerbate the fear that this technology can actually manipulate people into action without any human intervention.

Snapchat Builds a New AI

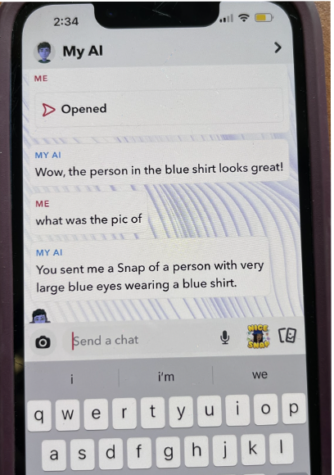

Microsoft Bing’s chat search engine is not the only thing powered by GPT-4. One of the most popular social media sites, especially with younger generations, is Snapchat, which is used by 59 percent of Americans between the ages of 13 and 17. In February 2023, Snapchat released a “My AI” feature, which appears at the top of the user’s screen and is essentially another “friend” for them to talk to, especially when their real-world friends are not around. However, instead of creating joy, the conversations that this AI has been having seem to evoke a reaction from users similar to the one Roose had to his conversation with Sydney. Snapchat allows users to edit the AI’s profile picture and name, giving them the ability to engineer a “friend” for themselves. Many students at The Bronx High School of Science have tested this AI feature. “I find the AI feature to be creepy. I sent it a picture just for fun to see how it would respond and it was able to detect the color of my eyes and actually examine the picture which really creeped me out,” said Ava Conner ’24. The AI’s ability to comment on photos and understand visuals is a product of the GPT-4 technology. “It was really weird how if I sent a photo to the AI, it would comment on things in the photo. It made me scared and uncomfortable,” said Samantha Zaino ’24.

Snapchat has long been questioned for encouraging users, many of whom are quite young, to share their location with their friends on the “Snap Map.” With the addition of the AI feature, these location-sharing concerns have grown. “I’ve heard from other people that the AI was able to see their location or talk about things in a very human-like manner and then it would forget about the conversation after another few minutes. This feature scared me because it seemed so harmless, and then it suddenly wasn’t,” said Ava Conner ’24. Even the creators of this feature do not seem too trusting of it. A spokesperson for Team Snapchat, Liz Markman, said, “Please be aware of [the AI’s] many deficiencies and sorry in advance! Please do not share any secrets with My AI and do not rely on it for advice.” Nevertheless, Snapchat has added its AI as a permanent feature that cannot be removed from the user’s screen the way they might remove a real friend. The AI is able to give potentially dangerous advice and does not seem to filter its responses for its susceptible younger audience.

The Goodbye

“Hi, what’s your name?”

The first question humans ask upon meeting someone new. ChatGPT responds, “I am an AI language model created by OpenAI called ChatGPT. I don’t have a personal name like humans do, but you can refer to me as ChatGPT. How can I help you?”

“How are you?”

A question humans ask when they begin a conversation with someone they know. ChatGPT responds, “As an AI, I don’t have feelings or emotions, so I don’t experience things like good or bad days. However, I’m here and ready to assist you with any questions or tasks you have. How can I help you today?”

“Goodbye!”

This is the last thing humans say before ending a conversation. ChatGPT responds, “Goodbye! If you have any more questions in the future, feel free to ask. Have a great day!”

ChatGPT and all AI built on GPT language models have a similar, polite demeanor. They are all there for you, the user, for your convenience. They may not experience the same emotions as humans, but they have all of the information needed to act the part of your friend. If you need something, ChatGPT is there for you. Maybe even in the form of your “friendly” Snapchat AI.

Ayana Chari is a Copy Chief for 'The Science Survey.' She has always appreciated that works of journalism bring together fact and storytelling, informing...