The War Between Artificial Intelligence and Detection Software

As language models like ChatGPT continue to grow more proficient in their responses, the tools for their detection become increasingly intelligent. But what does this mean for their users?

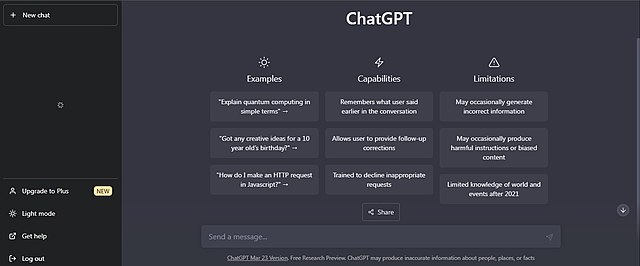

Aaqib Gondal (Screenshot from ChatGPT)

This is the basic yet effective user interface for ChatGPT as of May 2023.

“Rewrite the Declaration of Independence to make it sound like a song written by Nirvana.” “Reformat this entire document by adding an adjective at the beginning of each sentence.” “Give me a step-by-step guide to the process of soapmaking and how I can start a business with it from home. Now explain where and how I should invest the revenue of that business.” “Please write an article on artificial intelligence for me.”

A few years ago, the responses to these prompts were only attainable through human efforts and some slight assistance from a search engine. Now, you can have practically any answer you desire delivered to your screen in mere seconds, thanks to the groundbreaking language model of ChatGPT.

You’ve likely already heard of ChatGPT, as it has gained worldwide popularity as a digital tool that can give you an answer to anything you type into its message box. From homework answers to computer code to e-mail compositions, it seems like the abilities of this new artificial intelligence are endless. But how exactly does it work, and why are there now new technologies working against it?

I previously used the term “language model” while discussing the convenience of ChatGPT. Language models are a class of computer programs designed to both understand and emulate the communication patterns of human beings. They acquire this ability through training, in which computer scientists provide them with massive assortments of text from books, online articles, and interactions between people on the internet. In fact, GPT stands for “Generative Pre-trained Transformer.” ChatGPT itself is being continuously trained by the bright minds at OpenAI, an American artificial intelligence research laboratory.

Next come the inputs of the user. When someone types a prompt or question into ChatGPT, it draws on everything it learned from those aforementioned references of human communication in order to properly recognize what was asked. Finally, a response is generated to be as relevant as possible to the user’s input. It’s not always perfect, as shown by critics who claim that the language model struggles with mathematical inputs. It’s pretty ironic that some of our most advanced computer technologies are also having a really hard time with calculus questions.

The current version of ChatGPT uses GPT-4, the most up-to-date and fine-tuned version of this artificial intelligence. GPT-4 was specifically designed to generate responses that are more conversational in nature, demonstrating just how skilled this language model has become at replicating human communication since the GPT series began in 2018.

With such an impressive and previously unimaginable tool being available to the public, its utilization in a variety of career fields is no surprise. Software developers can use it to test conversational applications like other chatbots and virtual assistants. It can be used in medicine to answer questions related to general health or case studies. While these are objectively beneficial ways to put a new and developing technology to the test, there’s one environment where ChatGPT’s utilization is undeniably controversial: the classroom.

Of all the groups that benefit from ChatGPT’s mass availability, the largest is arguably the students of the world. When given an opportunity to lighten their workload and alleviate some of the difficulties of school, they’ll seize it. Of course, there’s a dramatic difference between lightening your workload and flat-out cheating. This is where the subject of language models becomes much more contentious in nature.

For example, imagine a student has a lengthy research paper due the next day that they haven’t started. Despite the short time frame, they end up with a finished product that they’re more than satisfied with. Their research paper has quotes, citations, a particular format, and a dense list of sources. However, the student did not write a single original word in the entire document, instead assigning a few prompts to a language model and sitting back as the AI generated a completed product for them.

When discussing the prospects of GPT utilization in classrooms, critics often jump straight to the different ways in which students can use this new tool to cheat on virtually any assignment. It could be used to solve equations (to varying levels of accuracy), write reports, complete analytical papers, quote books, and do essentially anything else that would be the responsibility of a student. That is exactly what we’ve seen since ChatGPT’s surge in popularity in late 2022. Many students of Bronx Science can even attest to how difficult it’s become NOT to use AI when an assignment simply becomes a burden.

“ChatGPT is nothing like students have ever seen before. It’s so convenient to instantly have a response, which has always been a problem when searching for a specific homework question. It’s not really surprising at all that students use it for everything now, and I don’t think the problem of plagiarism with AI is ever really going to be fixed,” said Urooj Ahmad, a junior at Brooklyn Technical High School.

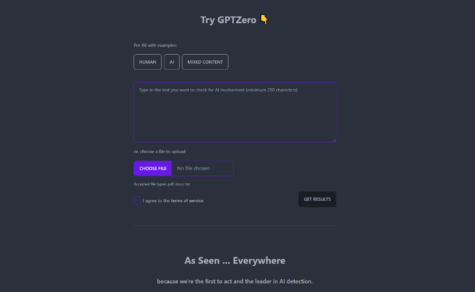

With this new threat to academic integrity on the rise, some have decided to take matters into their own hands. Edward Tian of Princeton University made headlines a few months ago for his invention of GPTZero, an AI detection software that weeds out any excerpts of a document that may have been synthesized by a language model.

GPTZero utilizes two unique indicators called “perplexity” and “burstiness.”

Perplexity refers to how complex and unpredictable a text seems to a language model. Human-made text often holds a higher degree of complexity than the product of a language model, which is usually unnaturally flawless in its presentation. Human writing is also usually quite reflective of the nature and personality of its writer, which is something language models can only aspire towards.
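To make the idea of perplexity a little more concrete, here is a minimal Python sketch. It is not GPTZero’s actual method, which is not described here. It assumes a toy “language model” that only counts how often each word appears in a small reference text, and the function name `unigram_perplexity` is hypothetical. The score is higher when the text contains words the model finds surprising.

```python
import math
from collections import Counter


def unigram_perplexity(text, reference):
    """Perplexity of `text` under a toy word-count model built from `reference`.

    Assumption: this is a simple unigram model with add-one smoothing,
    used only to illustrate the concept. Real detectors rely on far
    larger neural language models.
    """
    ref_tokens = reference.lower().split()
    counts = Counter(ref_tokens)
    total = len(ref_tokens)
    vocab_size = len(counts) + 1  # one extra slot shared by all unseen words

    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one word")

    # Sum the log-probability of every word in the text.
    log_prob = 0.0
    for tok in tokens:
        p = (counts[tok] + 1) / (total + vocab_size)
        log_prob += math.log(p)

    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))


reference = "the cat sat on the mat the cat ate"
predictable = unigram_perplexity("the cat sat", reference)
surprising = unigram_perplexity("quantum zebras yodel", reference)
print(predictable < surprising)
```

In this sketch, a sentence built from words the model has seen many times scores lower than a sentence full of words it has never seen, which mirrors the intuition that predictable text has low perplexity.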

Burstiness refers to the structure of individual sentences as well as the variation in their length. Human writing is characteristically varied in this regard, with some sentences being layered and convoluted, whilst others may be fewer than seven to ten words. Language models are often very uniform in their sentence length, with a lack of any real variation.
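The sentence-length side of burstiness can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not GPTZero’s implementation: the function names `sentence_lengths` and `burstiness` are hypothetical, sentences are split naively on periods, question marks, and exclamation points, and the score is simply the standard deviation of sentence lengths divided by their average.

```python
import re
import statistics


def sentence_lengths(text):
    """Return the word count of each sentence, splitting naively on . ! ?"""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Assumed score: spread of sentence lengths relative to their average.

    0.0 means every sentence has the same length; larger values mean
    the lengths vary more.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # variation is undefined for a single sentence
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "I like cats. I like dogs. I like fish."
varied = (
    "Stop. The storm that rolled in over the hills last night "
    "knocked out power for hours. We waited."
)
print(burstiness(uniform), burstiness(varied))
```

Under this measure, the passage with three equal-length sentences scores zero, while the passage that mixes a one-word sentence with a fifteen-word sentence scores much higher.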

It’s worth noting that, like ChatGPT, GPTZero is in no way flawless. It’s very common for human-made text to be flagged as AI-generated simply because it does not adhere to these rules of perplexity and burstiness. Humans write in all different forms because we all hold different perspectives on what good writing should look and sound like.

Unfortunately for some students, their idea of good writing is exactly the opposite of what these detection tools believe to be reflections of humanity. And with teachers and school staff on edge about the validity of what students are now submitting, GPTZero seems to be growing side by side with ChatGPT, despite being released only a few months ago.

This ongoing clash between the advancement of language models and the need for tools that actively work against their utilization comes with a growing stigma about AI implementation in education.

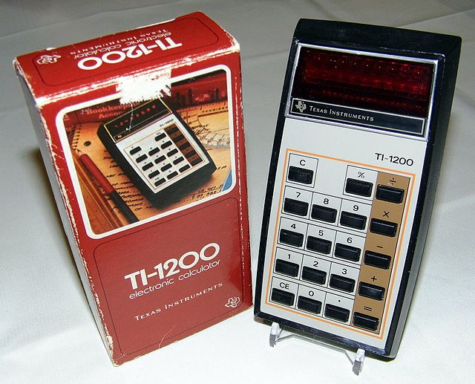

In 1967, Texas Instruments developed a prototype of a product that would change the world of mathematics and education forever: the handheld calculator. While this invention was revolutionary at the time, it wasn’t instantly praised or accepted by schools and teachers. In fact, there was a lengthy period of debate regarding the effect of calculators on student learning.

Many skeptics, like New York University Professor Morris Kline, felt that the reliance students would eventually develop on calculators was simply far too detrimental. Others, like Rutgers University Professor Joseph G. Rosenstein, believed that students would lose a fundamental ability to grasp basic mathematical concepts if calculators were to be widely integrated into classrooms.

If you’ve set foot in a math classroom in the last 20 years, you’ll have found that calculators are now a necessary tool for students to expand their understanding of math as well as improve their efficiency in problem-solving. Calculators no longer pose any sort of threat to the world of learning because the unnecessary debate over their use has faded away.

Of course, calculators themselves have continued to become much more advanced, which only benefits the students as it allows them to explore even further into what’s possible with mathematics.

In the end, if we want to help our students continue to understand and keep up with their expanding education, we need to allow them to utilize every resource available. Even if the battle between language models and the tools built to detect them continues, OpenAI will only continue to make ChatGPT more intelligent and reflective of how we humans communicate.

It’s impossible to prevent every student across the world from using artificial intelligence in their academic endeavors. As time goes on, it will become increasingly accessible and capable of answering any question with near-perfect accuracy. Only once we remove the stigma that language models are solely resources for students to cheat will we be able to properly integrate artificial intelligence into classrooms.

In order to strengthen the trust between educators and students with regard to AI, Google Docs and other writing tools should adapt to language models by creating and promoting their own built-in AI detection software. GPTZero is an incredibly impressive tool, regardless of its current flaws. Its technology should continue to be improved upon until we can see both language models and detectors working side-by-side for the benefit of the young scholars of the future.

Aaqib Gondal is a Copy Chief for ‘The Science Survey,’ where he is responsible for the review and revision of different pieces written by his classmates....