The Bad Bunny Blunder: The Grammys’ Misstep and How It Fits Into the History of Captioning

What does the Grammys’ mistake during Bad Bunny’s set say about the Academy and captioning as a whole?

Tiago Gomes Music, CC BY-SA 4.0

Pictured is Tiago Gomes winning the Latin Grammy (the Latin counterpart to the Grammys) for Best Latin Jazz Album in 2018. Bad Bunny won the Grammy for Best Música Urbana Album, a category which the Grammys introduced in 2022.

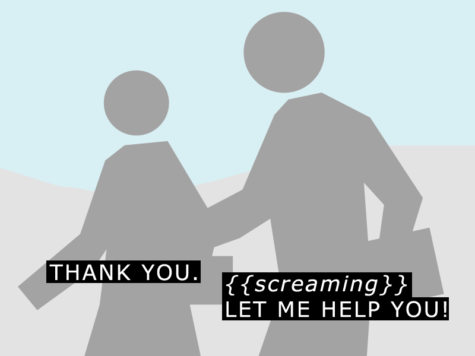

On February 5th, 2023, Bad Bunny gave an acceptance speech at the Grammys for his album ‘Un Verano Sin Ti,’ which has garnered over 4.5 billion streams in the U.S. alone. With Bad Bunny’s mass appeal and widespread popularity, his nomination and subsequent win came as no surprise. So when he opened the ceremony with a mashup of “El Apagón” and “Después de la Playa,” and the live captions of his performance read [SINGING IN NON-ENGLISH], followed by [SPEAKING NON-ENGLISH] during his acceptance speech, it came as an insult to many. Bad Bunny is one of the most popular artists in the world and was nominated in a category made up mostly of Latin artists, so the Recording Academy’s failure to arrange for a Spanish live captioner was both a shock and an insult to his Spanish-speaking fans.

Among those insulted was Representative Robert Garcia, a Peruvian immigrant representing California’s 42nd congressional district. He wrote an open letter to George Cheeks, the CEO of CBS, which broadcast the ceremony, demanding that the network “take serious measures to address the failures which made this mistake possible.” Garcia said that he felt personally offended by the misstep and called for the mistakes to be rectified.

Seeing the backlash, Cheeks responded with a letter of his own, saying, “Regrettably, errors were made with respect to the closed captioning of his performance and subsequent acceptance speech. We worked with a closed captioning vendor that did not execute at a standard to which we should rightfully be held. Regardless, we should have monitored the situation more closely. A bilingual (English and Spanish-language) real-time live captioner should have been utilized and the words used on the screen were insensitive to many.” He took some accountability but nevertheless tried to deflect the criticism, shifting some of the blame onto the captioning agency, CaptioningStar.

CaptioningStar is one of the largest captioning agencies, with 25 to 200 employees and revenue of $6.2 million. It works with many Fortune 100 companies, such as Google and Amazon, as well as many broadcast clients, including not only the Grammys but also CNBC and Fox News.

But how did captioning become so widespread that it can be found not only at these top companies but at almost every company around the world? In the fight for inclusivity, captioning has gradually become ingrained in broadcasts, whether live or recorded, to the point that it is now hard to find one without it.

Before explaining the history of closed captioning, it is important to understand the difference between subtitles, or open captioning, and closed captioning. Subtitles are used for mass audiences and are generally implemented in foreign films, for instance, to convey important information to the audience from characters speaking another language.

Subtitles assume that the viewer can hear the background audio and, as a result, do not include any information besides the dialogue. Before movies had sound, however, on-screen text was used to communicate expository information in silent films, as early as the 1920s. One method was the intertitle, in which text was displayed on screen but not integrated into the scene. Subtitles existed long before closed captions because they required less complex technology: they were always visible, with no option for viewers to turn them on or off. These days, the use of closed captioning is purely at the viewer’s discretion.

Closed captioning is made for deaf and hard-of-hearing audiences, enabling them to understand what is happening on screen that they can’t hear. Thus, it generally entails not only a transcription of the dialogue but also any context lost from a lack of audio. In TV shows and movies, it often includes other pertinent information, such as the person speaking, if not immediately made clear from the visuals of the scene. It is also used to convey other auditory cues, such as a character sighing or the tone of the music played in the background in order to enrich the viewing experience.

However, closed captions have not been around forever. The idea for closed captioning in television broadcasts dates to 1970, when the National Bureau of Standards (NBS) and ABC experimented with displaying a time code on televisions, using a decoder in the television set to recover and display the time. While that experiment failed, it suggested that the same approach could carry captions for television programs. With this in mind, the NBS and ABC began developing technology that would make captions available on all television sets.

In 1972, ‘The French Chef’ with Julia Child became the first TV show aired nationally with open captioning, meaning the captions were displayed to everyone who watched and could not be turned off. The technology was then developed further so that captions could be turned on at will, using a decoder in the television set.

The technology takes written captions and converts them into electronic code, which is carried in a previously unused part of the television signal and can be read by the decoder, much like the earlier attempt to transmit a time code. Captioning continued to be refined through experiments funded by the Department of Health, Education, and Welfare, but it was clear that more needed to be done, and securing cooperation from the major networks required a dedicated non-profit with a specific mission.

Thus, in 1979, the Department of Health, Education, and Welfare established the National Captioning Institute (NCI) with the mission of ensuring that “deaf and hard-of-hearing people have access to television’s entertainment and news through the technology of closed captioning.” The NCI facilitated the growth of closed captioning, and in 1980, ABC, NBC, and PBS began transmitting captions on select programming as decoders went on sale to the public.

After closed captioning had proven successful, the NCI pushed for real-time captions, providing the first real-time captions for the live broadcast of the Academy Awards in 1982. It hired court stenographers as its real-time captioners, a practice still in use today. Court stenographers are trained to type 180 to 225 words per minute with a high rate of accuracy, a feat unfathomable on a standard QWERTY keyboard. Captioners and court reporters instead use a stenotype machine, whose phonetically based layout allows them to transcribe words much faster and more efficiently. The output is then processed by a computer and transmitted to screens all around the world as the program is broadcast.

The real-time captioning of the Academy Awards was only the start of a tidal wave that brought us to where we are today. ‘World News Tonight’ became the first regularly scheduled broadcast with real-time captioning later that year. Nowadays, the overwhelming majority of publicly broadcast programs include captions, whether real-time or prepared in advance, a stipulation codified into law, notably by the 21st Century Communications and Video Accessibility Act, which made closed captions a legal requirement.

It is ironic, then, that the four major entertainment award shows in America, where some of the first strides in accessibility were made, have failed to keep up and have now fallen behind. The Grammys’ failure to provide accurate bilingual captions shows a major lack of foresight and a disrespect for the millions of Spanish-speaking Americans who communicate only in the United States’ second most spoken language.

CaptioningStar, for its part, says its mission is to provide captioning and other accessibility services that make content available to a much wider array of people; as its website puts it, “Accessibility is inclusivity.” The website also says that the company offers a variety of accessibility services in a wide array of languages, one of which is Spanish. However, neither party did its due diligence, and the appropriate level of captioning was not provided. For a company that champions accessibility and stresses the importance of inclusivity, CaptioningStar fell flat. The appropriate standard of care was not met.

According to Lee Bursten, a real-time captioner for VITAC, another major captioning agency, captioning an award show takes a great deal of research, both to stay on top of the “involved and hectic experience” that is an award show and to make the captioning process as efficient as possible. As Bursten told VITAC, “When prepping for awards shows, the first thing I do is Google the show name and try to find lists of the categories, nominees, and presenters.” He enters all the relevant names and any other unique, necessary information and creates shorthands for them, familiarizing himself with each so that he can write it during the show. But that is not the only information he has access to. Most of the broadcast is scripted in advance and given to the captioner beforehand, so those lines can be fed directly into the captions without being typed on the stenotype machine.

Often, however, award shows do not go as planned: hosts ad-lib, and producers cut entire sections for lack of time. Award shows are especially prone to this because every aspect is fine-tuned until the very last minute, leading to discrepancies between the prewritten script and what is actually said. The captioner therefore has to be fully attentive the entire time, ready to type the words manually as needed.

When talking about the prepared script and the behind-the-scenes preparation for real-time captioning, Bursten said, “Usually before the show I’ll receive a fully prepared and formatted script from the production folks at VITAC HQ. Having the script is great because it means I can simply feed the lines of script without having to write on my steno machine.”

Bursten continued, “The big caveat here is that the script won’t necessarily reflect exactly what happens on the live show, so I need to be ready to write on my steno machine at any moment, if they deviate from the script. For one thing, the script will say, ‘And the winner is –’ and then leave a blank, since obviously our client doesn’t yet know, at the time they send the script, who the winner will be. For that reason alone, I still need to do a full prep so that I can write the name of the winner as it’s said live. Also, of course, thank-you speeches by the winners won’t be in the script so I’ll need to write those live as well.”

This level of preparation is required of real-time captioners, so why is the same, if not a higher, standard of preparation not expected of the Recording Academy and CaptioningStar? Both parties were aware of Bad Bunny’s nomination, and yet neither thought to provide bilingual captioners to make one of the largest entertainment broadcasts accessible to more people.

This shows a massive lack of care on both ends, one that hopefully both parties have learned from. Though it has a long way to go, the Grammys has made significant strides in accessibility over the years; 2022 was the first year it provided audio description, which describes the visual scene aloud for blind and visually impaired viewers. It is clear that accessibility has increased significantly through the years, but there is always more to do. Using bilingual captioners, especially when the need is known in advance, is perhaps the next step, if a small one. But progress often happens piece by piece, in small steps.

Yardena Franklin is a Graphic Designer and Copy Chief for ‘The Observatory’ yearbook, as well as a Staff Reporter for ‘The Science Survey’ newspaper....