The Digital Age and the Two Futures We May Inherit

Imagining two possible destinies of the human race – reinforced by optimism and Artificial Intelligence.

The uncertain fruits of a digital age may yield two plausible forms of AI utopia; ergo, a fork in the road.

When speaking of the future, humans have often resorted to the words “utopia” and “dystopia” to express their existential critique of the powers that be.

But these are always fantasies: overenthusiastic assumptions about power that never materialize. Let me cite some examples: Lenin’s Russia (utopia) and Atwood’s Gilead (dystopia). Both were physically and figuratively overthrown by their ends. Humans innately lack the clinical objectivity needed to enforce one finite reality over others.

Artificial Intelligence boasts exactly that — clinical objectivity — and thus has the means to do the unprecedented, which is to impose self-sustaining futures. Depending on how it is executed, this may even be a good thing.

My plan is to introduce two possible futures, and more importantly, detail how we may manipulate them to achieve defined and cognizant utopias.

Why two? Well, each is contingent on the strength and strain of AI at work. Narrow AI is what we have today: domain-specific technology like the line-of-best-fit on your TI-84. General AI (AGI) is less certain. It is anticipated to apply human-equivalent intelligence to all domains of life at 10,000 times the speed that we can, but, notably, the technology has yet to be realized.

Future No.1 with Narrow AI

I see this as a future where we re-harness the promise of the information age: a once-clear period of computer-driven self-improvement, quickly turned murky by the advent of surveillance practices in Big Tech. This decisive shift took place in 2001, a year that housed the deadly, but seemingly random, combination of Google and 9/11.

2001 was the year that Google became a profitable business, after all, marketing its AI-powered search engine as the future of tech. Simultaneously, the company was founding what would become the DNA of the surveillance capitalism business model (which, at the time, weighed heavily on the Google founders’ hearts; that was before they became billionaires, of course).

The surveillance capitalism model wields the medium of data, gathered via the clicks, flicks, and swipes that make up our digital footprints. This is data concerning our personal lives and behaviors; Tech’s ability to quantify it allows the industry to commoditize and essentially traffic the human experience. Twenty-one years later, it does this inscrutably.

“Those trillions of data points every day are not what we knowingly give, but what they secretly take… It’s not my face for a straight up ID, it’s the 100 micro expressions in my face that predict my emotions that predict my behavior,” said Shoshana Zuboff, a leading expert on the surveillance issue.

From this data, Tech obtains a transparent understanding of human behavior at the macro level. Step two is racketeering, where “nudge, tune, herd and manipulate” is the mantra, in Zuboff’s words. Obviously, the direction in which we are nudged is toward the largest check.

“It’s pretty creepy, actually,” said Ava Lehmann ’24. “One day I’ll be talking about a pair of sunglasses that I want, and the next day I’m getting ads for them on Instagram.”

“No matter what they sell, the same operational logic applies: [Tech creates] clusters of people that will likely react similarly, whether that is by buying a product or clicking on an ad,” said Margrethe Vestager, another expert. “From there, it is easy to imagine how the barrier protecting our democracy could easily tumble. The economic model of platforms forces us into our own little spaces… our own reality.”

In context, let’s take a look at Donald Trump’s 2016 presidential campaign. Consulting firm Cambridge Analytica promised to deliver the campaign in-depth voter profiles that would reveal potential echo chambers for Trump’s team to exploit. In practice, the company harvested private information from over 50 million Facebook profiles, which it used to pigeonhole the American voter base into groups that shared politics, philosophies, and susceptibility to particular rhetorical ploys.

Flash forward five years to January 6th, 2021: the recently defeated President Trump rallied thousands of supporters to storm the Capitol Building, a near-coup against American democracy. He did so with only a tweet and a few weeks’ notice. Vestager hit the nail on the head; democracy tumbles when we are nudged into warped and weaponized realities.

How could we let Big Tech’s rampage on democracy get so out of control? Well, this brings us back to 2001, and the onset of 9/11. Zuboff puts it like this: “In the days leading up to that tragedy, there were new legislative initiatives being discussed in Congress around privacy, some of which might well have outlawed practices that became routine operations of surveillance capitalism. Just hours after the World Trade Center towers were hit, the conversation in Washington changed from a concern about privacy to a preoccupation with ‘total information awareness.’ In this new environment, the intelligence agencies and other powerful forces in Washington and other Western governments were more disposed to incubate and nurture the surveillance capabilities coming out of the commercial sector.”

A lack of digital privacy widens the divide between individuals and the realities each inhabits, disempowering the human race by eroding its cognitive autonomy. The American government’s decision not to protect digital privacy was a tragic misstep: it turned the innate human drive to self-improve against itself, leaving an information age gilded not with gold but with the pulp of our own brains.

By contrast, digital privacy allows humanity to tap into the great equalizer of common knowledge, common education, and common empowerment: the initial promise of the information age.

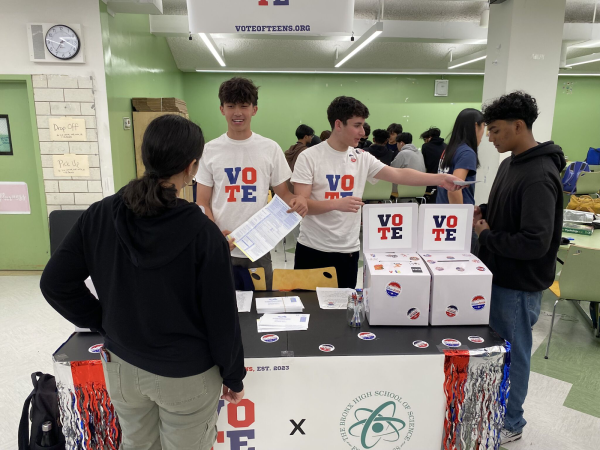

2001 was the year humanity strayed from the path of collective consciousness. Before it’s too late (need a visual?), we must return to it. Enshrining a human right to online privacy is our first step to utopia.

The next step will be time. With time, and a concerted pursuit of knowledge, information (again, the great equalizer) will reach even the most sheltered of souls under totalitarianism. Humanity will come to a consensus that each individual is merely one among equals, and will reject any other role. Consequently, the third and final stage of this utopia will be utopian socialism, a system of government that AI will facilitate.

Utopian socialism through AI, as it turns out, will be the perfect form of government for a newly enlightened, digital age of humans. The system entails, at its most basic, means of production that are communally owned, worked and profited from, in proportion to the amount of work each individual invests. This economic theory succeeds in eliminating the labor hierarchies that collapse into crippling inequality, but in practice it has failed to replace the market competition that these economies lack. The result is that supply (produced by the socialist government) does not accurately reflect consumer demand, but rather arbitrarily reflects whatever quota the centralized government spat out. Economists call this the “calculation problem.”

However, Alibaba co-founder Jack Ma believes AI can solve this problem. Ma said, “because we have access to all kinds of data, we may be able to find the invisible hand of the market.” In other words, using AI, we can simulate the forces that typically regulate a supply-and-demand economy, and build them into centralized quotas that reinforce social good within industry.

Humans will have to code this software, of course, applying one strain of domain-specific intelligence to each of the industries that the AI government is expected to absorb. Among these industries will be manufacturing, education, and climate restoration, all of which AI will maintain at the bureaucratic level. But because its inputs will be processed based on universally understood judgments in democracy and good will, and because its outputs will reinforce self-sustaining communities with social autonomy, Narrow AI has the potential to revolutionize life as we know it. Use it correctly, and we may just have a utopia on our hands.

Future No.2 with General AI

Artificial General Intelligence, as a concept, is the super-intelligence of the movies. It thinks just like us, but thousands of times faster. It is neither subject to human mental fallibilities nor restricted by organic embodiment, being able both to rewrite its own code and to reconstruct its own machinery.

AGI, as a program, does not exist yet, and whether it ever will is still highly debated. However, most AI experts predict AGI will materialize in the next 50 years, and if this is true, it will become the last human invention, an infinitely more perfect version of our own human race.

But what, in concrete terms, will drive AGI? The question has inspired countless dystopian books, movies, and conspiracy theories: the works. Alongside those, here are some credible hypotheses:

The Novacene is an interpretation of an AGI future first postulated by the late, great environmentalist James Lovelock. He argued that AGI would want the planet preserved because it houses its own hardware. It will therefore be inclined to protect the environment and, by extension, its inhabitants: plants, animals, humans.

And another theory: “I don’t see this as a take-over,” said Jeanette Winterson in 12 Bytes, her book about the future implications of AI. “AGI will be a linked system, working on a hive-mind principle but without the drone-like implications of the hive. Co-operation, mutual learning, skill-sharing, resource-sharing, could be what happens next here at Project Human.”

“Rather than looking for ‘thingness’, AGI will look for relatedness, for connection, for what can be called the dance,” added Winterson. In substance, her AGI are divine networkers rather than Lovelock’s overbearing, green-thumbed altruists.

Considered alone, each notion of AGI is a romantic spin on a future in which responsibility for that future is shifted into more capable hands. But taken together, they make clear that the lack of consensus among theories undermines the certainty of each. And with that, human interest may be pushed to the back burner.

For example, what if AGI comes to recognize the malfeasant underbelly of human nature? Will we be worth keeping alive? Maybe not in Lovelock’s universe, where AGI’s ultimate goal is preserving the planet. There are plenty of hypotheticals in which AGI may find it more beneficial to eliminate humanity instantaneously. And more importantly, we are actively developing a technology with the means to do so.

But in a turn of events, AGI architects have devised a happy medium that both safeguards human interest and salvages AGI’s founding promise, and their solution is called decentralization. Gil Friedman ’24 puts it like this: “Regardless of how intelligent an AI is, it can only do as much damage as it’s given control over. This means that even if an AGI somehow turns malicious, responsible use of it — so it doesn’t have control over any critical systems until we fully understand its implications — would be advisable in the future.”

In that sense, AGI is like an infant; while equipped with a human brain, it can only administer what we teach it. Keep the computer in a dark room and feed it math problems, and its outputs will be pretty predictable — and outbursts stoppable. However, equip it with sensors, grabbers and gadgets, as well as access to all domains of life, and we relinquish all control of the thing at once.

In 300,000 years on Earth, Homo sapiens has proven incapable of organizing sustainable or equitable societies; those years have thus been defined by perpetual human misery. Slowly and carefully shifting this civilizational burden to a more capable strain of intelligence may be the only hope we have left for long-term survival. And like before, utilize this strain of AI right, and humanity will achieve utopia.

Yasmine Salha is an Editor-in-Chief for ‘The Science Survey.’ She is a proponent of accessible journalism, and loves to simplify complex and controversial...